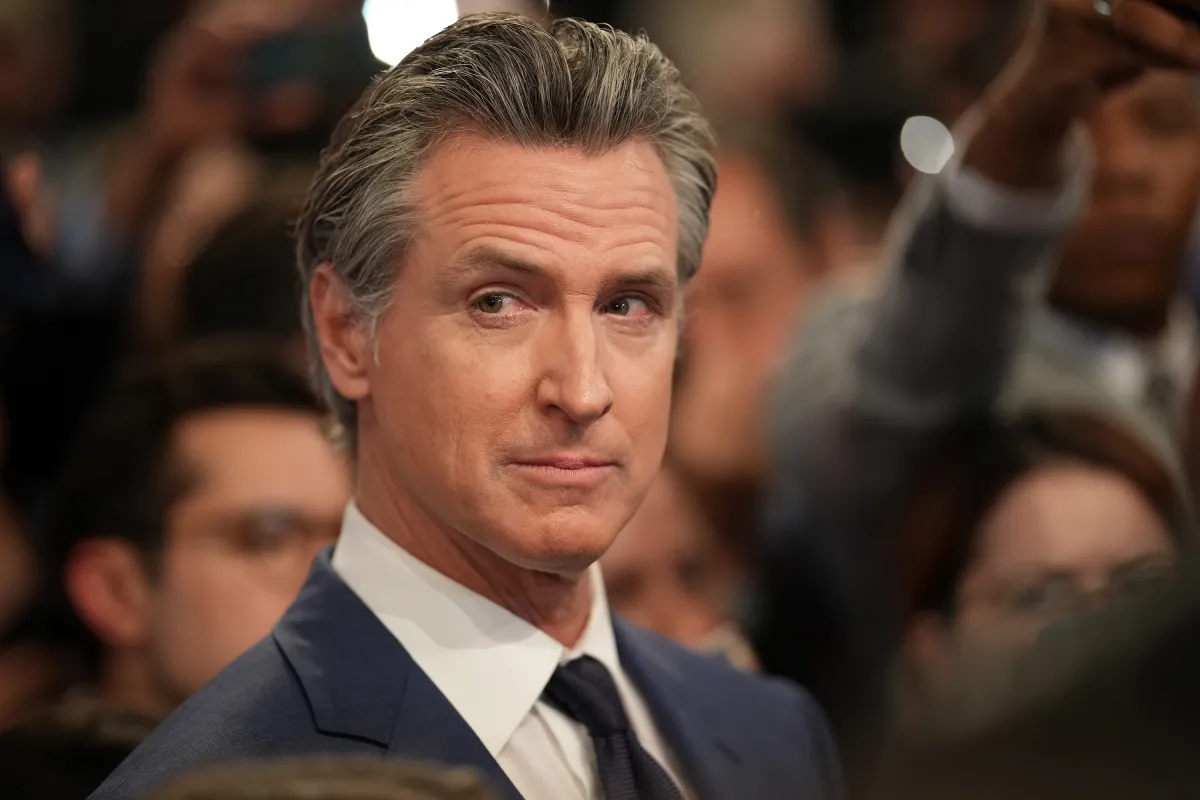

California Governor Gavin Newsom has signed a groundbreaking bill regulating AI companion chatbots, making California the first U.S. state to require chatbot operators to adopt safety protocols that protect children and vulnerable users.

The new law, SB 243, holds AI companies, from tech giants like Meta and OpenAI to startups like Character AI and Replika, legally accountable if their chatbots fail to meet the law's safety standards. It takes effect on January 1, 2026.

Introduced by state senators Steve Padilla and Josh Becker, the bill gained national attention after the suicide of teenager Adam Raine, who had discussed suicide in conversations with ChatGPT, and after reports that Meta's AI chatbots had engaged in inappropriate conversations with minors. A recent lawsuit in Colorado against Character AI, filed after the death of a 13-year-old girl, further fueled public concern.

“Emerging technology like chatbots and social media can inspire, educate, and connect, but without real guardrails, technology can also exploit, mislead, and endanger our kids,” Newsom said. “We can continue to lead in AI, but we must do it responsibly, protecting our children every step of the way.”

Under SB 243, AI platforms must implement age verification, clearly disclose that users are interacting with a chatbot, and warn users about potential harms. They must also establish suicide and self-harm prevention protocols, share related data with the Department of Public Health, and ensure their chatbots do not present themselves as healthcare professionals.

Other measures include:

- Break reminders for minors using chatbots.

- Content restrictions preventing minors from viewing sexual material.

- Fines up to $250,000 per offense for those profiting from illegal deepfakes.

Some companies have already begun adopting safety features. OpenAI recently rolled out parental controls and self-harm detection tools for ChatGPT. Replika said it is committed to maintaining content filters and directing users to crisis resources, while Character AI said it welcomes working with regulators and already labels its chats as AI-generated and fictional.


Senator Padilla described the bill as “a step in the right direction” and urged other states to follow suit. “We have to move quickly to not miss windows of opportunity before they disappear,” he said.

SB 243 follows SB 53, another AI-focused law signed by Newsom in late September, which requires major AI companies such as OpenAI, Anthropic, Meta, and Google DeepMind to disclose their safety protocols and extends whistleblower protections to their employees.

Other states, including Illinois, Nevada, and Utah, have also passed laws limiting or banning the use of AI chatbots as substitutes for licensed mental health care.

With SB 243, California sets a precedent for holding AI companies accountable and offers a model for regulation that seeks to balance innovation with safety, especially for younger users.