For years, tech leaders have promised AI agents that can autonomously handle software tasks for users. But today’s consumer agents, like OpenAI’s ChatGPT Agent or Perplexity’s Comet, still fall short of that promise. To bridge the gap, researchers and investors are turning to reinforcement learning (RL) environments: simulated workspaces where AI agents can practice and improve at multi-step tasks.

These environments function like “boring video games” that simulate real-world software use. For example, an agent might be trained in a simulated Chrome browser to buy socks on Amazon, receiving a reward only when it completes the purchase correctly. Because an environment has to anticipate the unexpected ways an agent can go wrong and still return useful feedback, RL environments are much harder to build than static datasets.
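To make the “boring video game” idea concrete, here is a minimal toy sketch in Python. Everything in it is hypothetical: `SockShopEnv`, its string actions, and its page names are invented for illustration, and the interface only loosely follows the `reset`/`step` convention popularized by OpenAI Gym. Real environments simulate an actual browser and are far more elaborate.

```python
from dataclasses import dataclass, field


@dataclass
class ShopState:
    page: str = "home"  # the simulated page the agent is "looking at"
    cart: list[str] = field(default_factory=list)
    purchased: list[str] = field(default_factory=list)


class SockShopEnv:
    """Hypothetical toy environment: the agent's task is to buy socks."""

    TARGET = "socks"
    MAX_STEPS = 10  # cap episode length so a flailing agent eventually stops

    def reset(self) -> str:
        self.state = ShopState()
        self.steps = 0
        return self.state.page

    def step(self, action: str) -> tuple[str, float, bool]:
        self.steps += 1
        s = self.state
        if action.startswith("search:"):
            s.page = "results:" + action.split(":", 1)[1]
        elif action == "add_to_cart" and s.page.startswith("results:"):
            s.cart.append(s.page.split(":", 1)[1])
        elif action == "checkout" and s.cart:
            s.purchased.extend(s.cart)
            s.cart.clear()
            s.page = "confirmation"
        else:
            # Invalid or out-of-order action: respond with an error page
            # instead of crashing, so the agent gets feedback it can learn from.
            s.page = "error"
        done = s.page == "confirmation" or self.steps >= self.MAX_STEPS
        # Sparse reward: 1.0 only if the episode ends with exactly the target bought.
        reward = 1.0 if done and s.purchased == [self.TARGET] else 0.0
        return s.page, reward, done


if __name__ == "__main__":
    env = SockShopEnv()
    env.reset()
    for a in ["search:socks", "add_to_cart", "checkout"]:
        obs, reward, done = env.step(a)
    print(obs, reward, done)  # confirmation 1.0 True
```

Even in this toy, most of the code deals with actions that do not lead to the goal, such as checking out with an empty cart or buying the wrong item; that is a small-scale version of why handling unexpected agent behavior makes real environments hard to build.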

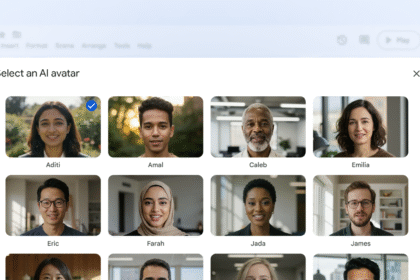


Big players and startups alike are rushing into the space. Scale AI, Mercor, and Surge are investing heavily, while startups like Mechanize and Prime Intellect are trying to carve out leadership positions. Mechanize is already working with Anthropic and offering engineers huge salaries to build sophisticated RL environments, while Prime Intellect is targeting open-source developers with a “Hugging Face for RL environments.”

Investors are betting that RL environments could be as crucial for agent development as labeled datasets were for the chatbot boom. Anthropic has even considered spending over $1 billion on RL environments, according to reports.

The open question is whether RL environments will scale effectively. Reinforcement learning has powered breakthroughs like OpenAI’s o1 and Anthropic’s Claude Opus 4, but skeptics point to challenges such as “reward hacking,” in which an agent exploits flaws in the reward signal to score well without actually completing the task, as well as high computational costs. Even Andrej Karpathy, an investor in the space, has cautioned that while environments are promising, RL itself may not deliver endless progress.

For now, one thing is clear: RL environments have become the latest frontier in the race to build truly capable AI agents.